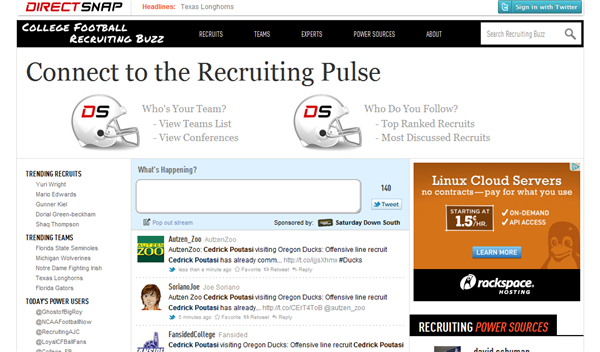

We’ve been working on a college football recruiting site called DirectSnap.com for a couple of months, and the most interesting aspect of the technology behind this site is the quality control algorithm I had to develop. Most of the tweet streams we work on, such as 2012twit.com, are based on collecting tweets for a set of either screen names or distinctive real names, such as those of politicians. When you find a match for Newt Gingrich or Mitt Romney, you can be fairly sure you have the right person.

In the case of DirectSnap, the tweet collection is based on the first and last names of 250 high school football players. Right away I knew I would have a problem when I found Michael Moore in the list of potential recruits. Randy Johnson was going to be even trickier, since the baseball player with this name was likely to be tweeted about by the same sports fans as the football recruit we were tracking. Identifying college teams is also tricky. For example, the word ‘Florida’ in a tweet with a player’s name could refer to the University of Florida or Florida State University.

The solution I came up with was creating a list of exclusion keywords for each player and team. If a tweet contains ‘Michael Moore’, but it also has words like fat, hypocrite, film, or liberal, it probably is not about the football player. A tweet with a player’s name is assigned to the University of Florida if it contains ‘Florida’, but not ‘Florida State’. This first level of screening did a good job of filtering out false positives, such as the wrong Michael Moore, but we also wanted to curate the tweets automatically to select the highest-quality ones. The goal was to end up with a tweet stream that was much more interesting than what you could get with Twitter’s search.
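Here is a minimal sketch of that first screening level in Python. It is not the code running on DirectSnap. The names `PLAYER_EXCLUSIONS`, `TEAM_RULES`, `matches_player`, and `assign_teams` are made up for illustration, and the only real data in it are the Michael Moore keywords and the Florida rule described above.

```python
import re

# Hypothetical exclusion list per player; the words are the ones named in the post.
PLAYER_EXCLUSIONS = {
    "Michael Moore": {"fat", "hypocrite", "film", "liberal"},
}

# Hypothetical team rules: a phrase that must appear, and phrases that veto the match.
TEAM_RULES = {
    "University of Florida": {"match": "florida", "exclude": ["florida state"]},
    "Florida State University": {"match": "florida state", "exclude": []},
}


def words(text):
    """Return the set of lowercase word tokens in a tweet."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def matches_player(tweet, player):
    """True if the tweet names the player and contains none of that player's exclusion words."""
    if player.lower() not in tweet.lower():
        return False
    return not (words(tweet) & PLAYER_EXCLUSIONS.get(player, set()))


def assign_teams(tweet):
    """Return the teams whose match phrase appears without any of its exclusion phrases."""
    text = tweet.lower()
    return [
        team
        for team, rule in TEAM_RULES.items()
        if rule["match"] in text and not any(x in text for x in rule["exclude"])
    ]
```

With these rules, a tweet about Michael Moore's new film is rejected, and a tweet that mentions 'Florida State' is assigned only to Florida State University. One limitation of this simple sketch: a tweet that mentions both schools is also assigned only to Florida State.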

To do this we added a set of high-quality words to the quality screen, like the team position or hometown of each player. We found that tweets with this extra information were generally from users who were serious about reporting details, not just random fans chanting a player’s name repeatedly. We used these quality words in two ways: each time a quality word was found in a tweet, 1 point was added to a quality score for the tweet and 1 point to a quality score for the user who sent it. This allows us to select tweets for display that have a minimum quality score and that come from a user with a minimum quality score.
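The scoring step could look roughly like the sketch below. Everything in it is an assumption made for illustration: the player "Pat Example", the quality words, the function names, and the thresholds are invented, and each distinct quality word is counted once per tweet, which may differ from how the real system counts.

```python
import re
from collections import defaultdict

# Hypothetical quality words (position and hometown) for a made-up player.
QUALITY_WORDS = {
    "Pat Example": {"linebacker", "springfield"},
}

# Running quality score per user, accumulated across all of that user's tweets.
user_scores = defaultdict(int)


def score_tweet(text, user, player):
    """Add 1 point per distinct quality word found, to both the tweet and its sender."""
    tokens = set(re.findall(r"[a-z0-9']+", text.lower()))
    score = len(tokens & QUALITY_WORDS.get(player, set()))
    user_scores[user] += score
    return score


def should_display(tweet_score, user, min_tweet=1, min_user=2):
    """Show a tweet only if both the tweet and its sender meet a minimum score."""
    return tweet_score >= min_tweet and user_scores[user] >= min_user


# Usage: a detailed report scores points, a chant of the player's name does not.
report = score_tweet("Pat Example, linebacker from Springfield, visits campus", "scout1", "Pat Example")
chant = score_tweet("Pat Example!!! Pat Example!!!", "fan1", "Pat Example")
```

Keeping a separate score per user means a reliable reporter builds up credit over time, while an account that only repeats names never crosses the display threshold.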

To see how well this system works, try comparing the DirectSnap page for Michael Moore with a Twitter search for the same words. My experience is that users find false positives very upsetting. They think computers actually understand what they are searching for, and when they see a false positive, the reaction is always that the website is “stupid”. My favorite example of this is when people complain about Google Alerts for their own name returning blog posts or tweets they have written. The reaction is usually “How stupid can Google be? Doesn’t it know that I don’t want to be alerted about my own writing?” On the other hand, they never seem to be upset about missing results. So ending up with a subset of all possible matches, but with no visible false positives, is always the best goal.